Microsoft Fabric and Azure AI services

#MicrosoftFabric #AzureAI #Notebooks #SynapseML #Python #SentimentAnalysis #ChatCompletion #PowerBIReport

In this Article we will explore How we can use Azure AI Services in Microsoft Fabric to enhance data.

Basic way to use the GPT-3.5-Turbo and GPT-4 models

In this, we’ll discuss how to work with Azure AI Services using Microsoft Fabric Notebooks. To start, please create a Notebook in your Microsoft Fabric Workspace and follow the steps provided below.

#In this code example, I will show how to connect with the OpenAI

# API utilizing the Azure backend to work with a large language model

#like GPT-3.5-Turbo or GPT-4. The script establishes the API settings,

# engages in a conversation with the AI model and displays the outcome.

# Import the necessary modules: use `os` for managing environment variables

# and `OpenAI` for interacting with the OpenAI API.

import os

import openai

# Set the API type to "azure"

openai.api_type = "azure"

# Set the API version

openai.api_version = "API_VERSION"

# Get the Azure OpenAI endpoint from an environment variable

openai.api_base = os.getenv("AZURE_OPENAI_ENDPOINT")

# Get the Azure OpenAI API key from an environment variable

openai.api_key = os.getenv("AZURE_OPENAI_KEY")

# Make a request to the Chat Completion API with a conversation

# containing a system message and a user message

response = openai.ChatCompletion.create(

# Specify the engine, e.g., GPT-3.5-Turbo or GPT-4,

#as the deployment name chosen during deployment

engine="gpt-35-turbo",

messages= [

{

"role": "system",

"content": "Assistant is a large language model

trained by OpenAI."

},

{

"role": "user",

"content": "What is Lakehouse?"

}

]

)

# Print the full API response

print(response['choices'][0] ['message'] ['content'])

Prebuilt Azure AI services

Microsoft Fabric smoothly integrates with Azure AI Services, allowing data enrichment through pre-existing AI models without prerequisites. This option utilizes Fabric authentication for AI service access and charges usage to Fabric capacity.

Text Analytics

Text Analytics, an Azure AI service, allows you to conduct text mining and text analysis using Natural Language Processing (NLP) capabilities. We will discuss Text Analytics in SynapseML

Sentiment Analysis

#import synapse.ml.core: Import the core Synapse machine learning library.

import synapse.ml.core

# from synapse.ml.cognitive.language import AnalyzeText:

# Import the Analyze Text class from the synapse.ml library.

from synapse.ml.cognitive.language import AnalyzeText

from pyspark.sql.functions import col

df = spark.createDataFrame(

[

("This Article provides very useful information about Microsoft Fabric OpenAI Services. It is very detailed",),

("This Article provides very limited information about Microsoft Fabric OpenAI Services. It requires some more information",)

]

,

["text"])

#Instantiate the Analyze Text model with the appropriate input column,

# analysis type, and output column:

model = (AnalyzeText()

.setTextCol("text")

.setKind("SentimentAnalysis")

.setOutputCol("response"))

# Apply the model to the input Data Frame and

# Extract the sentiment result:

result = model.transform(df)\

.withColumn("documents", col("response.documents"))\

.withColumn("sentiment", col("documents.sentiment"))

display (result.select("text", "sentiment"))

Named Entity Recognition (NER)

df = spark.createDataFrame([

("en", "Mr. John Doe has an appointment with Dr. Jane Smith at ABC Clinic on July 15th, 2022, at 10:30 am")

], ["language", "text"])

# Create an instance of the Analyze Text model with the following configurations:

# - Input column: "text"

# - Analysis type: "Entity Recognition"

# - Output column: "response"

model = (AnalyzeText()

.setTextCol("text")

.setKind("EntityRecognition")

.setOutputCol("response"))

# Add two models. Transform the Data Frame:

# - "documents": Extract the "documents" field from the "response" column

# - "entity Names": Extract the "text" field from the "entities" objects inside the "documents" column

result = model.transform(df)\

.withColumn("documents", col("response.documents"))\

.withColumn("entityNames", col("documents.entities.text"))

display(result.select("text", "entityNames"))

We will explore a use-case that illustrates the entire process of ingesting data and creating reports for review.

Imagine you manage a healthcare facility and want to improve the quality of care based on patient feedback from their doctor visits. You have a lot of reviews to go through, and it can be difficult to identify the most relevant information and rank doctors accordingly.

To get started, simply link Lakehouse to your notebook and you are good to go!

We will use patient feedback dataset to review feedback and generate feedback reports with OpenAI. We will be using a tool called SynapseML to help us examine patient feedback.

SynapseML is a free resource that simplifies the creation of large, complex systems for analyzing information.

Microsoft Fabric is a platform that already includes the newest version of SynapseML and comes with pre-made artificial intelligence models, making it very easy to design intelligent and adaptable systems for different areas of interest.

For this exercise I have used patient feedback with following columns

[

{"_1":"PatientID","_2":"int"},

{"_1":"DoctorName","_2":"string"},

{"_1":"FeedbackComments","_2":"string"}

]

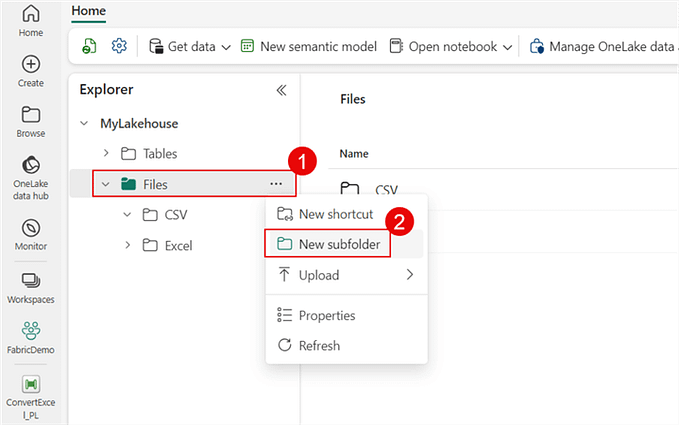

I have uploaded a sample dataset (assisted by OpenAI) in CSV format to the Files section of the Lakehouse. Then, I chose the "Load Table from File" option to create a new table within the Lakehouse.

Scenario 1

#Instantiate the AnalyzeText mode

# analysis type, and output column:

model = (AnalyzeText()

.setTextCol("FeedbackComments")

.setKind("SentimentAnalysis")

.setOutputCol("response"))

# Apply the model to the input Data Frame and

# Extract the sentiment result:

result = model.transform(df)\

.withColumn("documents", col("response.documents")) \

.withColumn("sentiment", col("documents.sentiment"))

display (result.select("FeedbackComments", "sentiment"))

Build a Power BI Report

Finally, we can write the results to a Lakehouse table and use Power BI direct lake mode to build a visual report.

patient_review_table_path = "Tables/patients_review"

# Create table from above generated results with sentiment analysis

result.write.format("delta"). mode("overwrite").option('overwriteSchema', 'true').save(patient_review_table_path)- Select OneLake data hub on the left side.

- Choose the Lakehouse linked to your notebook and click Open in the top right corner.

- Create a new semantic model by selecting patients_review and confirming.

- Generate a report by selecting or dragging fields onto the report canvas

Scenario 2

We have used Pre-built OpenAI functionalities to analyze patient feedback and generate sentiment analysis reports.

Our next step is to use the sentiment and feedback data to ask Azure OpenAI for suggestions on how to proceed.

# Load the first 10 rows of the "patients_review" table into a DataFrame

df = spark.sql("SELECT * FROM patients_review LIMIT 10")

# Define a UDF (User Defined Function) to create the prompt text for each row, combining two columns

process_prompt_column = udf(lambda x, y: f"Please suggest what is the next action to improve patient experience with the next visit in 2 lines: {x} {y}", StringType())

# Apply the UDF to the DataFrame, creating a new column "prompt" using the "FeedbackComments" and "sentiment" columns

df = df.withColumn("prompt", process_prompt_column(df["FeedbackComments"], df["sentiment"])).cache()

# Initialize the OpenAI Completion class with the required settings

completion = (

OpenAICompletion()

.setSubscriptionKey(key)

.setDeploymentName(deployment_name)

.setUrl("https://{}.openai.azure.com/".format(resource_name))

.setMaxTokens(100)

.setPromptCol("prompt")

.setErrorCol("error")

.setOutputCol("Suggestion")

)

# Apply the OpenAICompletion class to the DataFrame, generating AI suggestions

suggested_df = completion.transform(df)\

.withColumn("Follow-Up Steps", trim(lower(col("Suggestion.choices.text")[0])))\

.cache()

# Display the relevant columns from the completed DataFrame

display (suggested_df["FeedbackComments","Sentiment","Follow-Up Steps"])Conclusion

Throughout our discussion, we have explored various aspects of using AI models and services to enhance data analysis and reporting:

GPT-3.5-Turbo and GPT-4 Models: We looked at connecting to the OpenAI API through the Azure backend, which allows us to work with large language models like GPT-3.5-Turbo and GPT-4.

Prebuilt Azure AI Services: We discussed using readily available AI models such as Text Analytics, Sentiment Analysis, and Named Entity Recognition (NER) in Microsoft Fabric Notebooks. This method enables us to take advantage of pre-trained models without any additional setup.

Creating a Power BI Report: We demonstrated how to save results in a Lakehouse table and use Power BI’s direct lake mode to create a visual report, leading to insightful and interactive reports based on the analyzed data.

By combining these techniques and tools, data analysts and decision-makers can harness the power of AI to generate valuable insights, improve the quality of their analyses, and make more informed decisions.

Help links

Prebuilt Azure AI services in Fabric | Microsoft Fabric Blog | Microsoft Fabric

Azure OpenAI for big data — Microsoft Fabric | Microsoft Learn

How to work with the GPT-35-Turbo and GPT-4 models — Azure OpenAI Service | Microsoft Learn