Implementing and Utilizing Azure OpenAI Assistants

Azure OpenAI Assistants (Preview) offers a powerful platform for designing and developing AI assistants that are precisely customized to your unique needs.

Let’s break down what the Assistants API is all about.

What is the Assistants API?

The Assistants API is a tool for developers to build smart AI assistants. These assistants can do many tasks like answering questions, creating content, or even writing code.

Customizable AI Assistants:

Developers can tell the AI how to act and what it should do. For example, you can make an assistant that is very friendly and helpful or one that is more serious and factual.

Example: If you want an assistant to help with programming questions, you can set it to use a friendly tone and give detailed answers.

Access to Multiple Tools:

Assistants can use different tools at the same time to complete tasks. These tools can be made by OpenAI (like a code interpreter) or by developers themselves.

Example: If your assistant needs to run a piece of code and find a file at the same time, it can use a code interpreter to run the code and a file search tool to find the file.
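To make the idea of combining tools concrete, here is a minimal sketch of how a tools list might be assembled before it is passed to an assistant. The helper `build_tools` and the `lookup_appointment` schema are hypothetical names introduced only for illustration; the 128-tool ceiling is the limit quoted in this article.

```python
from typing import Any, Dict, List

MAX_TOOLS = 128  # per-assistant limit quoted in this article


def build_tools(function_schemas: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Combine the built-in tools with custom function tools (hypothetical helper)."""
    # Built-in tools are referenced by type only.
    tools: List[Dict[str, Any]] = [
        {"type": "code_interpreter"},
        {"type": "file_search"},
    ]
    # Custom tools are wrapped as "function" entries with a JSON schema.
    for schema in function_schemas:
        tools.append({"type": "function", "function": schema})
    if len(tools) > MAX_TOOLS:
        raise ValueError(f"An assistant accepts at most {MAX_TOOLS} tools")
    return tools


# Hypothetical custom function schema, used only as an example.
lookup_schema = {
    "name": "lookup_appointment",
    "description": "Look up an appointment by patient name.",
    "parameters": {
        "type": "object",
        "properties": {"patient_name": {"type": "string"}},
        "required": ["patient_name"],
    },
}

tools = build_tools([lookup_schema])
print([tool["type"] for tool in tools])
```

The resulting list is the kind of value that would be handed to the `tools` argument when creating an assistant.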

Persistent Threads:

Assistants can remember conversations with users over time using Threads. These Threads keep the conversation history, making it easier to continue the chat later.

Example: If a user asks a question today and follows up next week, the assistant can remember the earlier conversation and give a better answer.
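One way to get this "pick up where we left off" behavior is to remember which Thread belongs to which user. The sketch below is an assumption about how an application might do that, not something the Assistants API provides: `ThreadRegistry` is a hypothetical class, and `fake_create_thread` stands in for a real call that creates a Thread and returns its ID.

```python
from typing import Callable, Dict


class ThreadRegistry:
    """Maps a user ID to a thread ID so follow-ups reuse the same Thread (hypothetical)."""

    def __init__(self, create_thread: Callable[[], str]) -> None:
        self._create_thread = create_thread
        self._threads: Dict[str, str] = {}

    def thread_for(self, user_id: str) -> str:
        # Create a thread the first time we see a user, then keep reusing it.
        if user_id not in self._threads:
            self._threads[user_id] = self._create_thread()
        return self._threads[user_id]


# Stand-in for a real thread-creation call; it just hands out sequential IDs.
_counter = iter(range(1, 1000))


def fake_create_thread() -> str:
    return f"thread_{next(_counter)}"


registry = ThreadRegistry(fake_create_thread)
first = registry.thread_for("patient-42")   # first visit: a thread is created
later = registry.thread_for("patient-42")   # follow-up: the same thread is reused
other = registry.thread_for("patient-7")    # a different user gets a new thread
print(first, later, other)
```

In a real application the registry would be backed by a database, and the factory would return the ID of a newly created Thread.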

File Access and Creation:

Assistants can access and create different types of files during conversations. They can also make files like images or spreadsheets and refer to them in their messages.

Example: If your assistant makes a chart based on user data, it can create an image of the chart and send it to the user.

This article shares my insights on using Azure OpenAI Assistants, with a focus on a sample use case in healthcare. It covers the current state of the technology, and I plan to cover the latest advancements in the next article.

— — — — — — — — — — — — — — — — — — — — — — — — — — — — — — —

An individual AI assistant can utilize up to 128 tools, including a code interpreter and file search. You also have the flexibility to create your own custom tools using functions.

Definitions

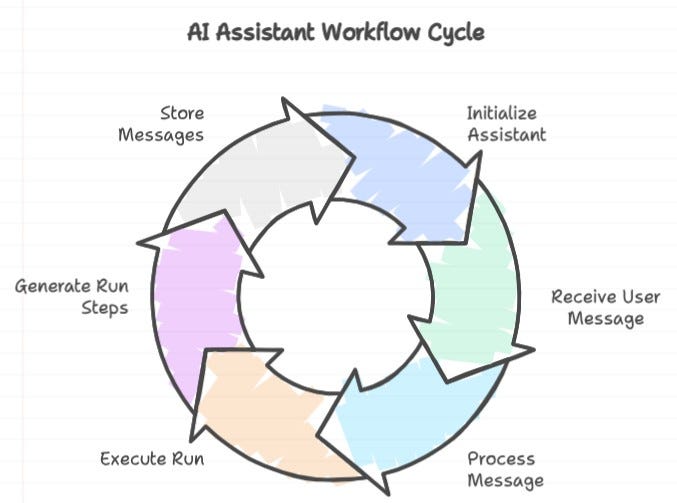

Thread: A conversation session between the Assistant and a user, where Messages are stored. The Thread's contents are automatically truncated to fit within the model’s context window.

Message: A communication, either from the Assistant or the user, that can include text, images, and other files, stored as a list within the Thread.

Run: The process of activating an Assistant to perform tasks based on the content of the Thread, using its configuration and Messages within the Thread to call models and tools, and adding Messages as part of the process.

Run Step: A detailed sequence of actions performed by the Assistant during a Run, where tools may be used, or Messages created. Reviewing Run Steps helps in understanding how the Assistant reaches its final outcome.
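The relationship between these four terms can be easier to see as a toy data model. The sketch below does not use the SDK's types; the dataclasses and the `toy_run` function are hypothetical and exist only to show how a Run reads a Thread, records Run Steps, and appends a Message.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


@dataclass
class RunStep:
    kind: str      # "tool_calls" or "message_creation"
    detail: str


@dataclass
class Thread:
    messages: List[Message] = field(default_factory=list)


@dataclass
class Run:
    assistant_name: str
    status: str = "queued"
    steps: List[RunStep] = field(default_factory=list)


def toy_run(thread: Thread, assistant_name: str) -> Run:
    """Simulate a Run: use a tool, then add a reply Message to the Thread."""
    run = Run(assistant_name)
    run.status = "in_progress"
    run.steps.append(RunStep("tool_calls", "code_interpreter"))
    reply = Message("assistant", f"Echo: {thread.messages[-1].content}")
    thread.messages.append(reply)
    run.steps.append(RunStep("message_creation", reply.content))
    run.status = "completed"
    return run


thread = Thread([Message("user", "Summarize my appointment")])
run = toy_run(thread, "Appointment Summary Assistant")
print(run.status, [step.kind for step in run.steps], len(thread.messages))
```

Reading `run.steps` afterwards mirrors what reviewing Run Steps gives you in the real service: a trace of how the final Message came about.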

— — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — —

How to run an Assistant

- Initiate a Run: Unlike creating a completion in the Chat Completions API, creating a Run is an asynchronous operation.

- Immediate Response: The operation returns immediately with the Run’s metadata.

- Initial Status: The status in the metadata is initially set to queued.

- Assistant’s Operations: The status is updated as the Assistant begins performing its tasks.

- Task Execution: This includes using tools and adding messages.

- Loop Check: To determine when the Assistant has finished processing, poll the Run in a loop.

- Status Monitoring: Keep polling while the status is queued or in_progress.

- Variety of Statuses: In practice, a Run can pass through other statuses as well, such as requires_action, completed, failed, cancelled, or expired. The individual actions taken during a Run are recorded as Run Steps.
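The polling pattern described in this list can be sketched independently of the SDK. In the example below, `poll_until_settled` is a hypothetical helper and the `simulated` iterator stands in for repeated status lookups on a real Run; the `max_polls` cap is an added safeguard, not part of the API.

```python
import time
from typing import Callable

PENDING_STATUSES = {"queued", "in_progress"}


def poll_until_settled(
    fetch_status: Callable[[], str],
    interval_seconds: float = 1.0,
    max_polls: int = 60,
) -> str:
    """Call fetch_status until the Run leaves the pending statuses (hypothetical helper)."""
    for _ in range(max_polls):
        status = fetch_status()
        if status not in PENDING_STATUSES:
            return status
        time.sleep(interval_seconds)
    raise TimeoutError(f"Run still pending after {max_polls} polls")


# Simulated sequence of statuses a Run might report.
simulated = iter(["queued", "in_progress", "in_progress", "completed"])
final_status = poll_until_settled(lambda: next(simulated), interval_seconds=0)
print(final_status)
```

With a real client, `fetch_status` would retrieve the Run and return its `status` field; the caller then decides what to do with a result such as requires_action or failed.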

— — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — —

Python Implementation:

Let’s walk through implementing and running Azure OpenAI Assistants in Python. We’ll set up the client, create the assistants, and then manage Threads and Runs through the API.

import os

from openai import AzureOpenAI

client = AzureOpenAI(
    api_key=os.getenv("AZURE_OPENAI_API_KEY"),
    api_version=os.getenv("AZURE_OPENAI_VERSION"),
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
)

# Name of your model deployment. The environment variable name here is an
# assumption; use whatever your own setup defines.
MODEL_NAME = os.getenv("AZURE_OPENAI_DEPLOYMENT_NAME")

patient_assistant = client.beta.assistants.create(
    name="Appointment Summary Assistant",
    # Adjacent string literals are concatenated, so each one ends with a space.
    instructions=(
        "You are an AI assistant designed to retrieve and summarize patient appointment details. "
        "When asked to provide an appointment summary, follow these steps: "
        "1. Access the patient's appointment data. "
        "2. Extract relevant details such as appointment date, time, doctor, and purpose. "
        "3. Summarize the information in a clear and concise format. "
        "4. If the data retrieval is unsuccessful, notify the user and attempt to retrieve the information again."
    ),
    tools=[{"type": "code_interpreter"}],
    model=MODEL_NAME,
)

doctor_assistant = client.beta.assistants.create(
    name="Doctor Available Appointments Assistant",
    instructions=(
        "You are an AI assistant designed to help users find available appointments with doctors. "
        "When asked to provide available appointment slots, follow these steps: "
        "1. Access the doctor's schedule and check for open time slots. "
        "2. Filter the results based on the user's preferences, such as date, time, and specific doctor. "
        "3. Present the available appointments in a clear and organized manner. "
        "4. If no appointments are available, suggest alternative dates or doctors. "
        "5. If the data retrieval is unsuccessful, notify the user and attempt to retrieve the information again."
    ),
    tools=[{"type": "code_interpreter"}],
    model=MODEL_NAME,
)

Once the assistants are created, we can list the assistants available on the Azure OpenAI resource and look up a specific one by name in order to run it.

import os
from typing import List

from openai import AzureOpenAI

# Connection settings, read from the same environment variables as above.
API_KEY = os.getenv("AZURE_OPENAI_API_KEY")
API_VERSION = os.getenv("AZURE_OPENAI_VERSION")
API_ENDPOINT = os.getenv("AZURE_OPENAI_ENDPOINT")

assistant_client = AzureOpenAI(
    api_key=API_KEY,
    api_version=API_VERSION,
    azure_endpoint=API_ENDPOINT,
)


class AssistantNotFoundException(Exception):
    """Raised when no assistant with the requested name exists."""


def get_assistants_list(assistant_client, order: str = "asc", limit: int = 5) -> List:
    """Return one page of existing assistants."""
    assistants = assistant_client.beta.assistants.list(
        order=order,
        limit=limit,
    )
    return assistants.data


def create_assistant(assistant_client, assistant_name: str, instructions: str, model_name: str):
    """Create an assistant with the given name, instructions, and model."""
    assistant = assistant_client.beta.assistants.create(
        instructions=instructions,  # Instructions for the assistant.
        model=model_name,  # Model to be used by the assistant.
        tools=[{"type": "code_interpreter"}],  # List of tools, currently just a code interpreter.
        name=assistant_name,  # Name for the new assistant.
    )
    # Return the created assistant object.
    return assistant


def get_assistant_by_name(assistant_client, assistant_name: str):
    """
    Gets an existing assistant.

    Args:
        assistant_client: The client object to interact with the assistant service.
        assistant_name (str): The name of the assistant, i.e., use case.

    Returns:
        assistant: The assistant object if found.

    Raises:
        AssistantNotFoundException: If the assistant with the given name is not found.
    """
    # Retrieve existing assistants using the provided client.
    # A larger page size makes it less likely that the assistant is missed.
    existing_assistants = get_assistants_list(assistant_client, limit=100)

    # Create a dictionary mapping assistant names to their respective assistant objects.
    assistant_names = {assistant.name: assistant for assistant in existing_assistants}

    # Check if the requested assistant name exists in the dictionary.
    if assistant_name in assistant_names:
        return assistant_names[assistant_name]
    raise AssistantNotFoundException(f"Assistant '{assistant_name}' not found")


appointment_assistant = get_assistant_by_name(assistant_client, "Appointment Summary Assistant")

# Next: get the assistant, start a Thread, and attach the assistant to it.

— — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — —

Create Thread

# Sample user input; replace it with the real query (placeholder text for illustration).
data = "Give me the appointment summary for patient XYZ"

# Create a new thread using the assistant client
new_thread = assistant_client.beta.threads.create()

# Add a message to the newly created thread with the role of "user" and the provided content
assistant_client.beta.threads.messages.create(
    thread_id=new_thread.id,  # Using the ID of the newly created thread
    role="user",  # Role of the message sender
    content=data,  # Content of the message / input
)

# Run the assistant for the created thread and specify the assistant ID
assistant_run = assistant_client.beta.threads.runs.create(
    thread_id=new_thread.id,  # Using the ID of the newly created thread
    assistant_id=appointment_assistant.id,  # ID of the assistant to run
)

— — — — — — — — — — — — — — — — — — — — — — — — — — — — — — — —

Instead of calling a specific assistant, we can use another assistant to orchestrate the process by selecting the appropriate one based on the query’s context.
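Before looking at the real planner, it may help to see the routing idea in isolation. The sketch below assumes the planner emits a JSON argument string with `query` and `agent_name` fields; `route_tool_call` and the two handler functions are hypothetical stand-ins for the specialized assistants.

```python
import json
from typing import Callable, Dict


def patient_handler(query: str) -> str:
    # Stand-in for the assistant that summarizes patient appointments.
    return f"[patient] {query}"


def doctor_handler(query: str) -> str:
    # Stand-in for the assistant that finds open doctor appointments.
    return f"[doctor] {query}"


HANDLERS: Dict[str, Callable[[str], str]] = {
    "patient_appointment_assistant": patient_handler,
    "doctor_appointment_assistant": doctor_handler,
}


def route_tool_call(arguments_json: str) -> str:
    """Decode the planner's JSON arguments and forward the query to the named agent."""
    arguments = json.loads(arguments_json)
    handler = HANDLERS.get(arguments["agent_name"])
    if handler is None:
        return f"No agent named {arguments['agent_name']!r} is registered."
    return handler(arguments["query"])


call = json.dumps(
    {"query": "appointments for patient XYZ", "agent_name": "patient_appointment_assistant"}
)
routed = route_tool_call(call)
print(routed)
```

Returning a message for an unknown agent, rather than raising, lets the planner see the problem in the tool output and react to it.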

Create Planner to Assist with Assistants

# Name of the planner assistant
planner_assistant_name = "appointment_assistants_planner"

# List of agents available for use
agent_arr = ["patient_appointment_assistant", "doctor_appointment_assistant"]

# String to accumulate agent names
agent_string = ""

# Loop through the agent list to create a formatted string of agent names
for item in agent_arr:
    agent_string += f"{item}\n"

# Instructions for the user proxy agent.
# The function name must match the tool defined below (communicate_with_assistant).
instructions_pa = f"""
As a user proxy agent, your primary function is to streamline dialogue between the user and the specialized agents within this group chat.

You have access to the following agents to accomplish the task:

{agent_string}

If the agents above are not enough or are out of scope to complete the task, then run communicate_with_assistant with the name of the agent.

When outputting the agent names, use them as the basis of the agent_name in the communicate_with_assistant function, even if the agent doesn't exist yet.

Run the communicate_with_assistant function for each agent name generated.

Do not ask follow-up questions; run the communicate_with_assistant function according to your initial input.

Plan:

1. patient_appointment_assistant extracts patient appointment information

2. doctor_appointment_assistant analyzes doctor appointment information
"""

# List of tools available
tools = [
    {
        "type": "code_interpreter"
    },
    {
        "type": "function",
        "function": {
            "name": "communicate_with_assistant",
            "description": "Send messages from this Assistant to other Assistants in this chat.",
            "parameters": {
                "type": "object",
                "properties": {
                    "query": {
                        "type": "string",
                        "description": "The query to be sent",
                    },
                    "agent_name": {
                        "type": "string",
                        "description": "The name of the agent to execute the task.",
                    },
                },
                "required": ["query", "agent_name"],
            },
        },
    },
]

from typing import Any, Dict, Optional

from typing import Any, Dict

# Define the agents and their corresponding threads.
# These are the assistant objects created earlier; a thread is created on first use.
agents_threads: Dict[str, Dict[str, Any]] = {
    "patient_appointment_assistant": {"agent": patient_assistant, "thread": None},
    "doctor_appointment_assistant": {"agent": doctor_assistant, "thread": None},
}


def communicate_with_assistant(query: str, agent_name: str) -> str:
    """
    Communicate with the specified assistant agent.

    Args:
        query (str): The query to send to the assistant.
        agent_name (str): The name of the assistant agent.

    Returns:
        str: The response from the assistant agent.
    """
    # Check if the specified agent exists in the agent dictionary.
    if agent_name not in agents_threads:
        print(
            f"Agent '{agent_name}' does not exist. This means that the multi-agent system "
            "does not have the necessary agent to execute the task. *** FUTURE CODE: AGENT SWARM***"
        )
        return ""

    # Retrieve the recipient information for the specified agent.
    recipient_info = agents_threads[agent_name]

    # If there's no thread object, create one.
    if not recipient_info["thread"]:
        thread_object = assistant_client.beta.threads.create()
        recipient_info["thread"] = thread_object

    # Dispatch the message to the specified agent.
    return query_with_message(query, recipient_info["agent"], recipient_info["thread"])


import json

import time

from openai.types.beta import Thread, Assistant


def get_available_functions(agent: Assistant) -> dict:
    """Map each function tool on the agent to a Python function of the same name."""
    available_functions = {}
    print(agent.tools)
    for tool in agent.tools:
        if hasattr(tool, "function"):
            function_name = tool.function.name
            print(function_name)
            if function_name in globals():
                available_functions[function_name] = globals()[function_name]
        else:
            print("This tool does not have a 'function' attribute.")
    return available_functions


def create_user_message(thread: Thread, message: str):
    return assistant_client.beta.threads.messages.create(
        thread_id=thread.id, role="user", content=message
    )


def create_run(thread: Thread, agent: Assistant):
    return assistant_client.beta.threads.runs.create(
        thread_id=thread.id,
        assistant_id=agent.id,
    )


def retrieve_run_status(thread: Thread, run_id: str):
    return assistant_client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run_id)


def submit_tool_outputs(thread: Thread, run_id: str, tool_outputs: list):
    # Use the run_id parameter; the original referenced an undefined `run` here.
    return assistant_client.beta.threads.runs.submit_tool_outputs(
        thread_id=thread.id, run_id=run_id, tool_outputs=tool_outputs
    )


def get_tool_responses(run, available_functions: dict) -> list:
    """Execute every function call the Run is waiting on and collect the outputs."""
    tool_responses = []
    if run.required_action.type == "submit_tool_outputs" and run.required_action.submit_tool_outputs.tool_calls is not None:
        tool_calls = run.required_action.submit_tool_outputs.tool_calls
        for call in tool_calls:
            if call.type == "function":
                if call.function.name not in available_functions:
                    raise Exception("Function requested by the model does not exist")
                function_to_call = available_functions[call.function.name]
                tool_response = function_to_call(**json.loads(call.function.arguments))
                tool_responses.append({"tool_call_id": call.id, "output": tool_response})
    return tool_responses


def query_with_message(message: str, agent: Assistant, thread: Thread) -> str:
    """Send a message, drive the Run to completion, and return the latest reply."""
    available_functions = get_available_functions(agent)
    create_user_message(thread, message)
    run = create_run(thread, agent)
    while True:
        if run.status in ["queued", "in_progress"]:
            # Still working: wait briefly, then refresh the Run.
            time.sleep(1)
            run = retrieve_run_status(thread, run.id)
        elif run.status == "requires_action":
            # The Run is waiting for function results: execute them and submit the outputs.
            tool_responses = get_tool_responses(run, available_functions)
            run = submit_tool_outputs(thread, run.id, tool_responses)
        elif run.status == "failed":
            raise Exception("Run Failed. ", run.last_error)
        else:
            # Messages are listed newest first, so index 0 is the latest reply.
            messages = assistant_client.beta.threads.messages.list(thread_id=thread.id)
            return messages.data[0].content[0].text.value

How to call Assistant Planner

assistants_planner = assistant_client.beta.assistants.create(
    name=planner_assistant_name, instructions=instructions_pa, model=MODEL_NAME, tools=tools
)

thread = assistant_client.beta.threads.create()

user_message = "Give me appointments of patient name XYZ"

message = query_with_message(user_message, assistants_planner, thread)

print(message)

In the next article, we will look at file search with assistants, including the latest updates and features that make assistants more effective when working with a knowledge base.

🙏 Thank you for reading and Happy Learning 🙂

— — — — — — — — — — — — — — — — — — — — — — — — — — — — — — —

Reference Links

Assistants overview — OpenAI API

How to create Assistants with Azure OpenAI Service — Azure OpenAI | Microsoft Learn

azureai-samples/scenarios/Assistants at main · Azure-Samples/azureai-samples (github.com)